Tokenomics #2: The Dream (and Risk) of Outcome-Based Pricing in AI Software

The price of success is knowing what success actually is… and what it costs to deliver.

Happy (Tokenomics) Tuesday!

One of my favorite books growing up was The Rainmaker. John Grisham's novel is about a young lawyer taking on insurance companies with nothing but grit and a contingency fee arrangement. There's something brilliant about the economics in that story: the lawyer only gets paid if he wins, and when he does win, he gets paid very well. Perfect alignment of incentives, clear attribution of success, and a business model that puts the service provider's interests directly in line with the customer's outcome.

The outcome-based pricing conversation around AI software is everywhere right now (and for good reason). It promises perfect alignment between buyer and vendor: only pay when value is delivered. In theory, it fixes everything wrong with SaaS seat-based bloat and unpredictable AI usage spikes. Instead of charging for effort, compute, or vague abstractions like "credits," you just charge for the thing the customer wants: results.

But that simplicity hides a deeper complexity.

Here's the real tension: in a sense, usage-based pricing is already a form of outcome-based pricing, just one where the model decides what the outcome is. You pay per token, per function, per interaction. But the "outcome" is inferred, often implicitly. Meanwhile, human-defined outcomes, like "issue resolved" or "churn prevented," are explicit, interpretable, and contract-worthy.

The trouble comes when those two diverge. What if the model believes it completed the task but the human disagrees? Or a user drops off mid-flow and the vendor counts it as a resolution? That dissonance creates billing disputes, mistrust, and strategic risk.

This post is about that dissonance.

We'll explore the full spectrum of outcome-based pricing, from deterministic work-completed pricing to probabilistic success-shared pricing. We'll walk through the four key quadrants of outcome design, define where different companies land, and unpack the risks of pricing outcomes before alignment is achieved.

What Most Companies Are Adopting Today

In the current AI software market, pricing is trending towards the “hybrid” model. Hybrid pricing has taken over because it promises to solve the budgeting chaos of pure usage-based models while maintaining cost alignment with actual AI infrastructure. Most AI companies now combine base platform fees with usage tiers or credits, so customers get predictable minimums but a variable final cost. Yet hybrid models still have issues:

It's still hard to budget (usage spikes can blow through credit allowances)

It doesn't guarantee value (you pay for platform access whether the AI delivers results or not)

It adds complexity (customers now have to understand both subscription tiers and usage calculations)
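To make the budgeting problem concrete, here is a minimal Python sketch of a hybrid invoice: a fixed platform fee that bundles a credit allowance, plus an overage rate on anything above it. The fee, allowance, and overage rate are hypothetical numbers chosen for illustration, not any vendor's price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HybridPlan:
    platform_fee: float         # hypothetical fixed monthly platform fee
    included_credits: int       # hypothetical credit allowance bundled into the fee
    overage_per_credit: float   # hypothetical price per credit beyond the allowance


def monthly_invoice(plan: HybridPlan, credits_used: int) -> float:
    """Platform fee plus overage on any credits consumed above the allowance."""
    overage_credits = max(0, credits_used - plan.included_credits)
    return plan.platform_fee + overage_credits * plan.overage_per_credit


plan = HybridPlan(platform_fee=2_000.0, included_credits=100_000, overage_per_credit=0.03)

for label, used in [("normal month", 80_000), ("usage spike", 250_000)]:
    print(f"{label}: {used:,} credits -> ${monthly_invoice(plan, used):,.2f}")
```

Nothing in the invoice asks whether the AI delivered results: under these assumed numbers the spike month bills $6,500 against $2,000 for a normal month, whether or not the extra usage produced anything useful.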

Outcome-based pricing promises to fix that. But to do it right, you need the model and the human to agree on what success means.

When the Model and the Human Disagree

At the heart of outcome pricing is a question of definition. Who decides what counts as a completed task or a successful resolution?

In usage-based pricing: The model defines success ("I returned a response, so I charged you X.").

In outcome-based pricing: The human defines success ("My problem was actually solved, so I pay you X.").
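The gap is easiest to see when the same interactions are billed three ways. The Python sketch below assumes each interaction carries two hypothetical flags: `model_claims_done` (the system's own judgment) and `human_confirms` (the customer's judgment). The prices are illustrative.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Interaction:
    model_claims_done: bool  # hypothetical flag: the model believes it finished the task
    human_confirms: bool     # hypothetical flag: the customer agrees the problem was solved


def bill(interactions: Sequence[Interaction], per_interaction: float, per_outcome: float) -> dict:
    """Bill the same interactions under usage, model-defined and human-defined outcomes."""
    usage = len(interactions) * per_interaction
    model_defined = sum(i.model_claims_done for i in interactions) * per_outcome
    human_defined = sum(i.human_confirms for i in interactions) * per_outcome
    # An interaction is disputed when the model claims success the human does not confirm.
    disputed = sum(i.model_claims_done and not i.human_confirms for i in interactions)
    return {
        "usage_based": usage,
        "model_defined_outcome": model_defined,
        "human_defined_outcome": human_defined,
        "disputed_interactions": disputed,
    }


sample = [
    Interaction(True, True),    # both agree: resolved
    Interaction(True, False),   # model says done, customer disagrees
    Interaction(True, False),   # user dropped off mid-flow, vendor counted a resolution
    Interaction(False, False),  # both agree: not resolved
]
print(bill(sample, per_interaction=0.50, per_outcome=1.00))
```

Here the model-defined bill comes to $3.00 while the human-defined bill is $1.00, and each of the two disputed interactions is a billing argument waiting to happen.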

When these are congruent, pricing aligns beautifully. When they're not, all hell breaks loose. This leads us to the core framework of this post.

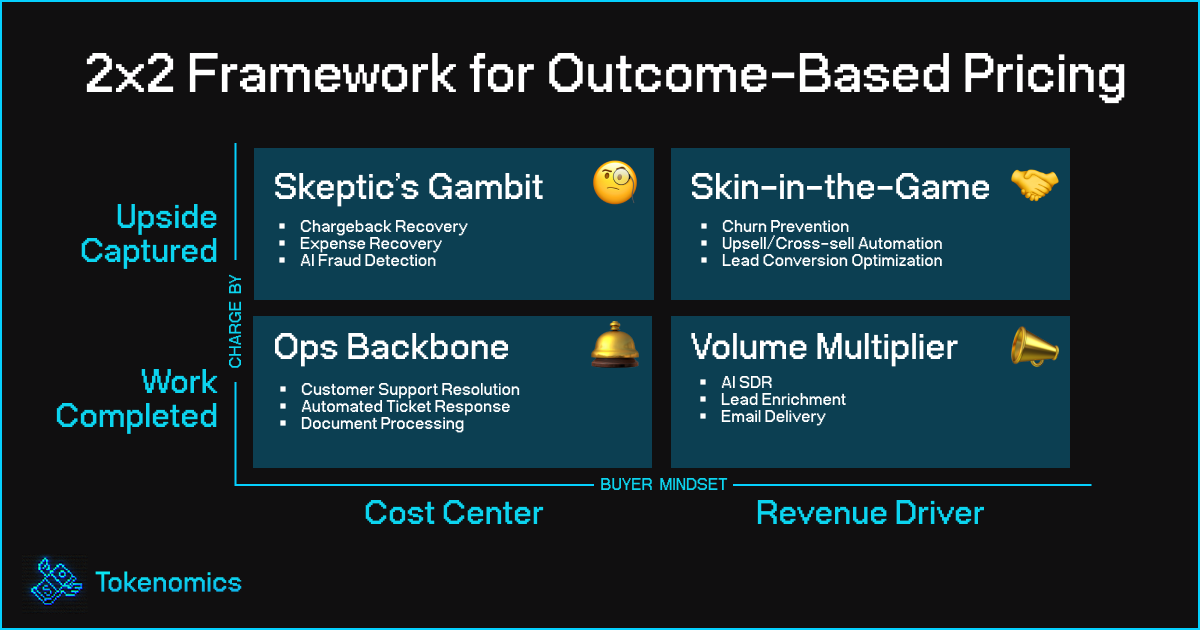

(Yet Another) 2x2 Framework

The implementation of an outcome-based pricing model should consider the buyer’s objective(s). Is your offer a revenue driver? Or is it a cost center?

So this creates two critical dimensions:

What are you charging for?

Work Completed (Task-Based) → Task finished, regardless of impact

Upside Captured (Success-Based) → Pay only when business results land

What’s the buyer mindset?

Cost Center → Budget sensitivity, efficiency metrics

Revenue Driver → ROI-focused, outcome-oriented

Put those together and you get four pricing quadrants:

Ops Backbone (Cost Center × Work Completed)

Think per-resolution pricing in support automation (e.g. Intercom Fin).

Focuses on replacing headcount and improving deflection.

Risk: Optimizes for cost reduction, not necessarily customer satisfaction.

Skeptic’s Gambit (Cost Center × Success-Based)

Classic chargeback or reimbursement models where vendors are only paid when cost avoidance is proven and verifiable.

Example: Chargeflow only takes a cut (~25%) of recovered funds from successful disputes.

Risk: Attribution is clear, but volume is unpredictable. Cash flow is highly variable and may not justify vendor overhead unless win rates are strong. Even when each case's attribution is clear, proving it carries a high burden of proof and slow cycles, so trust is hard to earn.
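The dependence on win rate can be written down directly. Under a contingency fee, the vendor earns its cut only on won disputes but pays to work every dispute, so the break-even win rate is the per-dispute cost divided by the fee earned on a win. A minimal Python sketch using the ~25% take rate mentioned above; the average dispute amount and per-dispute cost are hypothetical.

```python
def break_even_win_rate(take_rate: float, avg_dispute_amount: float, cost_per_dispute: float) -> float:
    """Win rate at which contingency revenue exactly covers the cost of working every dispute."""
    fee_per_win = take_rate * avg_dispute_amount
    return cost_per_dispute / fee_per_win


def vendor_margin(win_rate: float, take_rate: float, avg_dispute_amount: float,
                  cost_per_dispute: float) -> float:
    """Margin per dispute worked; revenue arrives only on wins, cost on every dispute."""
    revenue = win_rate * take_rate * avg_dispute_amount
    return (revenue - cost_per_dispute) / revenue


# Hypothetical inputs: $80 average dispute, $6 to assemble evidence and file each one.
print(break_even_win_rate(0.25, 80.0, 6.0))
for wr in (0.2, 0.4, 0.6):
    print(f"win rate {wr:.0%}: margin {vendor_margin(wr, 0.25, 80.0, 6.0):.0%}")
```

With these assumed inputs the vendor needs to win 30% of disputes just to break even; at a 20% win rate every dispute worked loses money, which is the "unless win rates are strong" condition in numbers.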

Volume Multiplier (Revenue Driver × Work Completed)

Pricing tied to agent throughput—e.g. outbound emails, summaries, meetings scheduled.

Scales with activity, but not necessarily conversion.

Risk: Over-incentivizes volume over quality; low gross margin if success rates are poor.

Skin-in-the-Game (Revenue Driver × Success-Based)

The most aligned but riskiest quadrant: pay only when revenue impact is proven.

Seen in performance-based agents, like chargeback wins or upsell automation.

Risk: Attribution complexity, lagged revenue, and long sales cycles.

Each quadrant reveals a different risk-reward profile. Your margins and customer trust depend on pricing that matches not just what is delivered, but how the buyer values it.
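Because the framework boils down to two binary questions, it can be encoded as a lookup table. A small Python sketch; the enum and field names are our own labels for the two dimensions, and the risk strings paraphrase the quadrant descriptions above.

```python
from enum import Enum


class ChargeBasis(Enum):
    WORK_COMPLETED = "work completed"     # task finished, regardless of impact
    UPSIDE_CAPTURED = "upside captured"   # paid only when business results land


class BuyerMindset(Enum):
    COST_CENTER = "cost center"           # budget sensitivity, efficiency metrics
    REVENUE_DRIVER = "revenue driver"     # ROI-focused, outcome-oriented


# (mindset, basis) -> (quadrant name, primary risk), paraphrasing the list above.
QUADRANTS = {
    (BuyerMindset.COST_CENTER, ChargeBasis.WORK_COMPLETED):
        ("Ops Backbone", "optimizes for cost reduction, not satisfaction"),
    (BuyerMindset.COST_CENTER, ChargeBasis.UPSIDE_CAPTURED):
        ("Skeptic's Gambit", "unpredictable volume, high burden of proof"),
    (BuyerMindset.REVENUE_DRIVER, ChargeBasis.WORK_COMPLETED):
        ("Volume Multiplier", "volume over quality, thin margins if success is poor"),
    (BuyerMindset.REVENUE_DRIVER, ChargeBasis.UPSIDE_CAPTURED):
        ("Skin-in-the-Game", "attribution complexity, lagged revenue"),
}


def classify(mindset: BuyerMindset, basis: ChargeBasis) -> str:
    """Name the quadrant an offer falls into, along with its main risk."""
    name, risk = QUADRANTS[(mindset, basis)]
    return f"{name} (main risk: {risk})"


print(classify(BuyerMindset.COST_CENTER, ChargeBasis.WORK_COMPLETED))
```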

Modeling the Margins — Four Real-World Scenarios

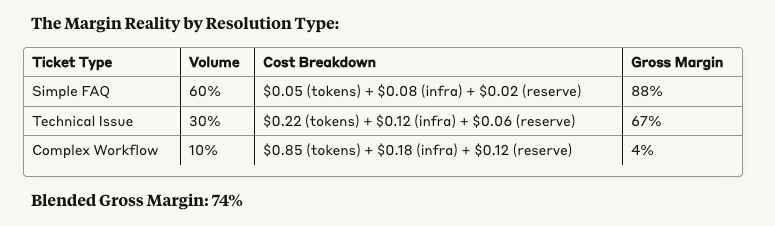

Scenario 1: Customer Support AI

(Work Completed × Cost Center)

The Promise: Intercom Fin charges $0.99 per resolution. Zendesk offers "pay only on successful automated resolutions." The value proposition is mathematical: 10x cost reduction compared to human support costs.

Pricing Model: $1.20 per resolved ticket

The Margin Reality by Resolution Type

Why It's Aspirational: As Decagon discovered, "the vast majority of customers choose per-conversation" over per-resolution because definition disputes become a recurring nightmare. Customer support remains fundamentally a cost center: buyers optimize for predictable savings, not uncertain value alignment. What counts as a "resolution" becomes a recurring billing dispute, while "conversation handled" is black and white.

The procurement logic is simple: why pay for outcome complexity when you're just trying to reduce headcount costs? Even when customers say they want resolution-based pricing, cost center budgets drive them toward whatever delivers the lowest total spend, usually per-conversation pricing that runs 20% cheaper overall.

Tokenomics Takeaway

Token cost is not the limiting factor; edge-case behavior is (complex tickets plus high escalation). Depending on the ticket distribution, margin flexes violently: if "complex" tickets rise from 10% to 25%, blended margin crashes.

Cost center buyers choose simplicity over alignment, even when outcome pricing could theoretically offer better value.
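The sensitivity to ticket mix is easy to reproduce. The Python sketch below bills $1.20 only on resolved tickets (the pricing model above) and assumes hypothetical per-ticket costs and resolution rates for simple and complex tickets. Costs are incurred whether or not a ticket resolves, since escalated tickets still consume tokens and orchestration.

```python
from dataclasses import dataclass

PRICE_PER_RESOLUTION = 1.20  # scenario pricing: billed only on resolved tickets


@dataclass(frozen=True)
class TicketType:
    cost_per_ticket: float  # hypothetical AI cost, incurred whether or not the ticket resolves
    resolution_rate: float  # hypothetical share the AI resolves (the only billable ones)


# Hypothetical unit economics, not any vendor's real figures.
SIMPLE = TicketType(cost_per_ticket=0.25, resolution_rate=0.95)
COMPLEX = TicketType(cost_per_ticket=1.50, resolution_rate=0.40)


def blended_margin(complex_share: float, price: float = PRICE_PER_RESOLUTION) -> float:
    """Blended gross margin across all tickets handled, when only resolutions earn revenue."""
    mix = [(SIMPLE, 1.0 - complex_share), (COMPLEX, complex_share)]
    revenue = sum(share * t.resolution_rate * price for t, share in mix)
    cost = sum(share * t.cost_per_ticket for t, share in mix)
    return (revenue - cost) / revenue


for share in (0.10, 0.25):
    print(f"complex share {share:.0%}: blended margin {blended_margin(share):.1%}")
```

With these assumed inputs the blended margin falls from about 65% to about 42% as the complex share moves from 10% to 25%. Complex tickets both cost more and resolve less often, so they add cost while removing billable revenue.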

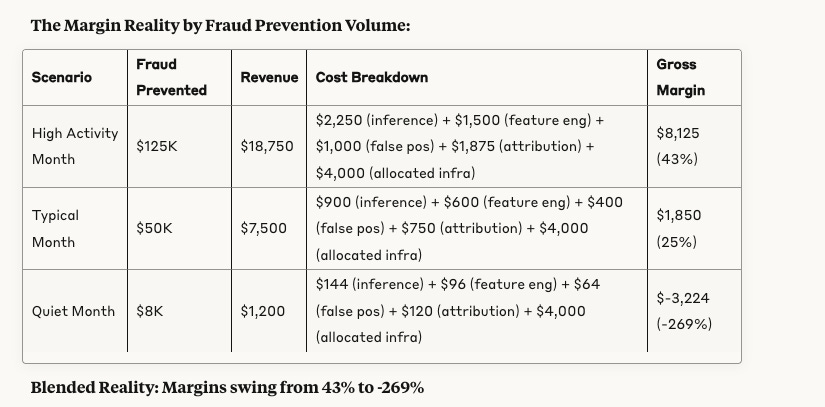

Scenario 2: Fraud Detection AI

(Upside Captured × Cost Center)

The Promise: Perfect outcome alignment. Customers only pay when fraud is actually prevented. Like AirHelp's 35% commission model for flight compensation, the attribution is crystal clear.

Pricing Model: 15% of fraud losses prevented

Why It’s Aspirational:

Success-based pricing sounds like a win-win. But unlike a lawsuit, fraud prevention is not a binary outcome; it’s probabilistic. You still pay to run the model during quiet months. And every false positive comes with a cost (missed revenue, user friction).

What We Actually Learn From Modeling It:

Revenue depends on fraud volume you can't predict or control.

Fixed costs don't scale down because the infrastructure is always running and evaluating transactions for fraud.

False positive tax compounds: every legitimate transaction blocked creates customer service costs and lost revenue that you subsidize but don't get paid for.

As the customer's overall fraud prevention ecosystem improves, there is less fraud left to prevent and monetize.

Attribution infrastructure is expensive: proving you earned a commission requires costly reporting that eats into already thin margins.

Volatility compounds: monthly margin swings from 43% to -269% make financial planning impossible and investor confidence fragile.

Tokenomics Takeaway:

You've tied revenue to external factors completely outside your control. Quiet fraud months don't just mean lower revenue; they mean losses, because infrastructure costs continue.

You're exposed to seasonality, economic cycles, and ironically, your own success (less fraud = less revenue). This model only works if you can guarantee consistent baseline fraud activity.
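A minimal sketch of why quiet months turn into losses. Revenue is 15% of fraud losses prevented (the pricing model above), while infrastructure and attribution reporting are a fixed monthly cost and false positives add a further cost the vendor absorbs. All dollar figures are hypothetical, and they make no attempt to reproduce the 43% to -269% swing quoted above.

```python
COMMISSION = 0.15             # 15% of fraud losses prevented
FIXED_MONTHLY_COST = 40_000   # hypothetical: always-on scoring infra plus attribution reporting
FALSE_POSITIVE_COST = 5_000   # hypothetical: support load from blocked legitimate transactions


def monthly_margin(fraud_prevented: float) -> float:
    """Margin for one month: revenue scales with fraud volume, costs do not."""
    revenue = COMMISSION * fraud_prevented
    cost = FIXED_MONTHLY_COST + FALSE_POSITIVE_COST
    return (revenue - cost) / revenue


for month, prevented in [("busy", 600_000), ("average", 300_000), ("quiet", 100_000)]:
    print(f"{month:>7}: prevented ${prevented:,} -> margin {monthly_margin(prevented):.0%}")
```

Under these assumptions a busy month runs at 50%, an average month breaks even, and a quiet month loses twice its revenue, even though the model performed identically in all three.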

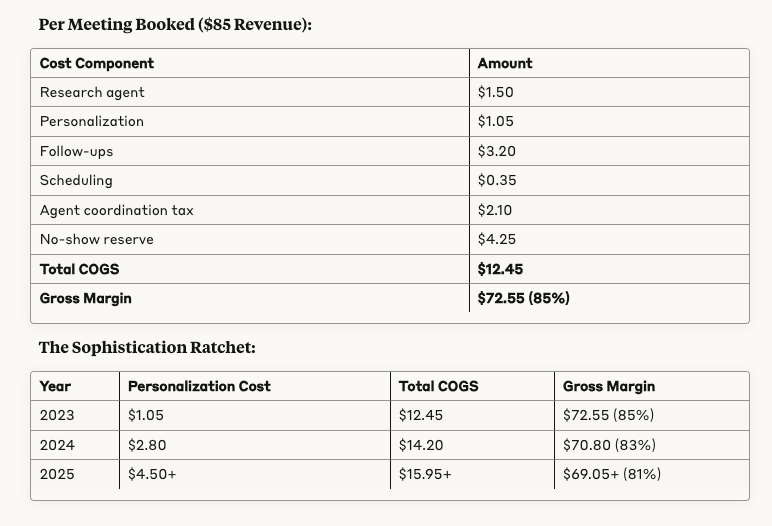

Scenario 3: Sales AI SDR

(Work Completed × Revenue Driver)

Company Profile: AI sales development platform for 200+ B2B companies

Pricing Model: $85 per qualified meeting booked + $3,500/month seat fee

Why It’s Aspirational:

The cost to get the job done keeps rising, but the price per meeting doesn’t. AI output is no longer enough—you need multi-agent orchestration, deep research, and adaptive touch sequences just to stay competitive.

What We Actually Learn From Modeling It:

There’s a personalization arms race. What’s “good enough” today is table stakes tomorrow.

Every agent you add compounds overhead: at 20–30% per handoff, costs enter a death spiral unless you have pricing power.

High margin today doesn’t protect you from tomorrow’s customer expectations.

Tokenomics Takeaway: You're in an arms race where the cost to deliver "good enough" work rises faster than your pricing power. Today's 85% margins assume current personalization standards, but buyer expectations compound annually. Each new competitor raises the bar for what counts as a "quality" meeting, forcing you to add more agents, deeper research, and longer workflows, while your $85 per meeting stays fixed. You're essentially shorting your own industry's innovation curve.
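The compounding is worth seeing as arithmetic. Assume, hypothetically, that today's fully loaded cost per qualified meeting is $12.75 (what an 85% margin on an $85 meeting implies), that each additional agent handoff multiplies that cost by 1.25 (the midpoint of the 20–30% range above), and that the $3,500 seat fee is left out. The price stays fixed at $85:

```python
PRICE_PER_MEETING = 85.0
BASE_COST = 12.75             # hypothetical: the cost implied by an 85% margin at $85
OVERHEAD_PER_HANDOFF = 0.25   # assumed midpoint of the 20-30% per-handoff range


def margin_after(handoffs: int) -> float:
    """Per-meeting margin once `handoffs` extra agent handoffs compound the cost."""
    cost = BASE_COST * (1 + OVERHEAD_PER_HANDOFF) ** handoffs
    return (PRICE_PER_MEETING - cost) / PRICE_PER_MEETING


for n in range(0, 10, 2):
    print(f"{n} extra handoffs: margin {margin_after(n):.1%}")
```

Under these assumptions the margin falls from 85% to about 11% after eight extra handoffs and goes negative at the ninth, while the $85 price never moves.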

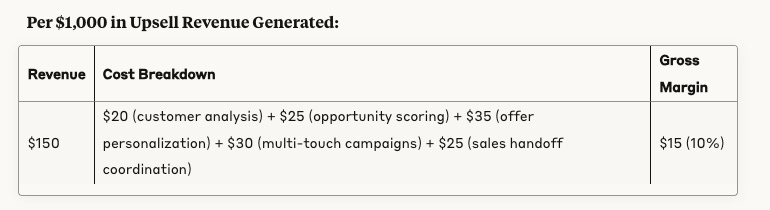

Scenario 4: AI Upsell Engine

(Upside Captured × Revenue Driver)

The Promise: 15% commission on AI-generated upsell revenue

Pricing Model: AI identifies opportunities, personalizes offers, and executes outreach; the customer pays only on closed deals

The Attribution War:

Upsell Success Scenarios:

AI-initiated, AI-closed: 25% of deals, 10% margin

AI-initiated, sales-closed: 60% of deals, split commission → 5% margin

Natural sales cycle (AI gets credit): 15% of deals, -130% margin
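The three attribution buckets above can be blended into a single program margin. The sketch weights each bucket by its share of deals and by a hypothetical revenue per deal, assuming a split commission pays half of a full one. The shares and margins are the illustrative ones listed above.

```python
# bucket -> (share of deals, revenue per deal relative to a full commission, margin)
BUCKETS = {
    "AI-initiated, AI-closed": (0.25, 1.0, 0.10),
    "AI-initiated, sales-closed": (0.60, 0.5, 0.05),  # hypothetical: split pays half
    "Natural sales cycle (AI gets credit)": (0.15, 1.0, -1.30),
}


def program_margin(buckets: dict) -> float:
    """Revenue-weighted margin across all attribution buckets."""
    revenue = sum(share * rev for share, rev, _ in buckets.values())
    profit = sum(share * rev * margin for share, rev, margin in buckets.values())
    return profit / revenue


print(f"blended program margin: {program_margin(BUCKETS):.1%}")
```

With these inputs the program runs at roughly -22%: the 15% of deals that would have closed in the natural sales cycle wipe out the thin margins on everything else.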

Why It's Aspirational: Sales teams fight every attribution decision. Did the AI "create" the opportunity or just identify an existing need? When sales reps close deals flagged by AI, who deserves credit? You're running expensive multi-touch campaigns while sales argues they would have found the upsell anyway. The more successful your AI becomes, the more sales pushes back on attribution.

Tokenomics Takeaway: You're building a business where your primary customer (sales leadership) has economic incentives to minimize your contribution. Every dollar you earn reduces sales team commission credit, creating internal political battles over attribution. Your margins get squeezed not by technology costs, but by organizational politics and revenue sharing disputes. You've created a model where success breeds resistance from the people who control your pipeline.

The Bigger Lesson: It's Not About the Numbers

Here's what this modeling exercise actually reveals (all the numbers, of course, were illustrative): outcome-based pricing isn’t just a billing strategy that you can (or should) implement overnight. It requires deeper thinking to make sure model-determined work matches human-defined value. When those diverge, even slightly, your margins can vanish, your trust can erode, and your contracts can implode.

The truth is, outcomes live on a gradient ranging from atomic actions to economic impact. The further you travel toward success-based pricing, the more you depend on humans to confirm that value was real, contextual, and worth paying for. That’s where risk concentrates.

Each quadrant we explored tells a different story:

Customer Support AI showed us that resolution counts hide escalating complexity.

Fraud AI reminded us that even perfect success can be invisible during a quiet month.

Sales AI SDR revealed that the bar for "done" keeps rising, but the price doesn’t.

AI Upsell Engine exposed that your biggest customer (sales leadership) has economic incentives to minimize your contribution.

So if you’re building in this world, the real decision isn’t just what to charge for. It’s whose definition of value you trust and how often you expect it to be wrong.